In 2026, AI marks its 70th anniversary as an academic term—a surprising milestone for a field that remained niche until the 2010s. Since then, AI has become a driving force of innovation, powered by vast data, cloud computing, better hardware, and smarter algorithms—igniting a modern Gold Rush. But rapid growth brings risks. The field evolves faster than regulators can react, creating a digital Wild West—data flows replacing shovels and spades. At Sigma Software, we’ve seen this shift up close through a surge in AI project demand.

Most importantly, and why I am writing this – working on various AI projects, we gained valuable insights on AI implementation and governance.

I took some notes, and wanted to share couple of guidelines for anyone building or thinking about building solutions with AI.

There are numerous great use cases

At this point, there are plenty of successful AI use cases, from Amazon saving 4500 developer-years by using AI to perform the transition from Java 7 to Java 19 to Lufthansa in a joint project with Google to optimize flight paths and thus reduce fuel consumption and CO2 emissions.

For instance, Nike has harnessed artificial intelligence (AI) to elevate customer experience through personalized services and product customization. One standout innovation is the Nike Fit tool, which utilizes computer vision, machine learning, and augmented reality to scan customers’ feet using a smartphone camera, providing precise size recommendations. This addresses a significant issue, as over 60% of people wear the wrong shoe size.

Another cool case to mention is the recent experiment from Nigeria, which explored the use of generative AI as a virtual tutor to enhance student learning. Over six weeks between June and July 2024, students participated in an after-school program focusing on English language skills, AI knowledge, and digital literacy. Students who participated in the program outperformed their peers in all assessed areas. Notably, the improvement in English language skills was substantial, with learning gains equivalent to nearly two years of typical schooling achieved in just six weeks.

One story close to my heart is how AI systems are saving the lives of Ukrainian soldiers and civilians. AI-powered technologies are identifying drones and rockets swarming Ukrainian skies, helping to protect cities and mitigate destruction.

But, not everything in the AI world has gone according to plan.

Remember McDonald’s AI Suggesting 260 McNuggets?

In June 2024, McDonald’s ended its trial of AI-powered drive-thru systems developed in partnership with IBM. Launched in 2021, the project aimed to improve order accuracy and speed. However, the system struggled with understanding diverse accents and dialects, leading to frequent errors — including one infamous incident where it suggested an order of 260 McNuggets. Ultimately, McDonald’s discontinued the project by July 26, 2024. Despite this setback, the company remains open to exploring future voice-ordering solutions and continues its partnership with Google Cloud to integrate generative AI into other business areas.

In April 2024, New York City’s AI-powered chatbot, designed to help small business owners, came under fire for providing inaccurate and even unlawful guidance. Instead of simplifying access to essential information, the chatbot suggested solutions that violated local regulations, potentially jeopardizing businesses. This highlights the risks of deploying AI without rigorous testing, as such errors can have serious consequences.

One AI system even screened out job applicants based on age. For instance, AI hiring platforms might target job ads to younger audiences or prioritize resumes with keywords like “junior” or “recent graduate,” unintentionally excluding older, qualified candidates. Ironically, Plato might not even pass the initial screening these days (he carried teaching in his academy until death).

In 2020, a UK-based makeup artist who had been furloughed was asked to reapply for her position. Despite excelling in skills evaluations, she was rejected due to low scores from an AI tool that assessed her body language. This case illustrates how AI systems, especially those trained on non-diverse data, can perpetuate existing biases.

These failures are stark reminders that AI still has a long way to go. They underscore the importance of responsible implementation, ensuring that AI systems are ethical and reliable.

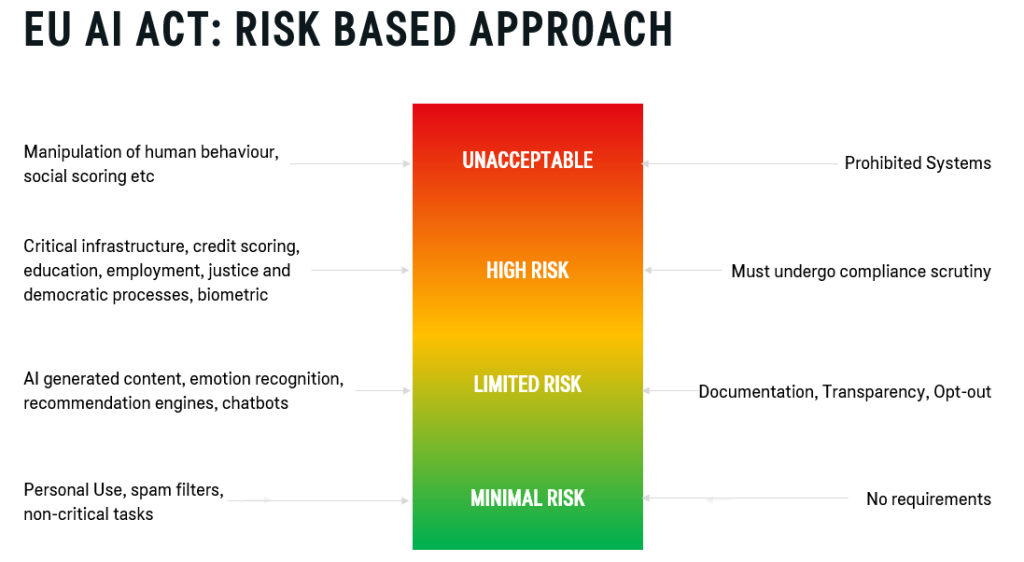

EU AI Act: Risk-Based Approach

You wouldn’t let your kids drive without a license—so why let teams use AI without guidance? Yet many do, exposing private data and code to security risks. We call this Shadow AI.

To address this, the EU introduced the AI Act. Any company using AI for EU data or decision-making must now comply.

The law bans “unacceptable risk” systems like social scoring and biometric categorization. High-risk AI—used in areas like healthcare, justice, or infrastructure—must meet strict requirements, including risk mitigation, transparency, and human oversight. Limited-risk systems like chatbots need clear labeling; low-risk tools face minimal rules. Think of it as AI parenting: high-risk kids need strict boundaries.

The law began rolling out in August 2024, with full enforcement by 2027. Fines for non-compliance can reach 7% of global revenue—so proactive compliance is crucial.

At Sigma Software, we adopted AI early—starting with code-assist tools and building our own solutions like a corporate HR bot, and a legal assistant for document reviews. With the rise of LLMs, we promoted responsible internal use and developed a practical checklist to ensure safe, effective AI adoption.

Checklist for AI Implementation and Governance

Implementing AI is like launching a rocket: you start small, test thoroughly, and then scale. Miss a step, and instead of reaching the stars, you might end up with a costly mess.

So, how to approach AI implementation having in mind all of the possible risks, setbacks and uncertainties?

-

- Where AI can make a tangible impact?

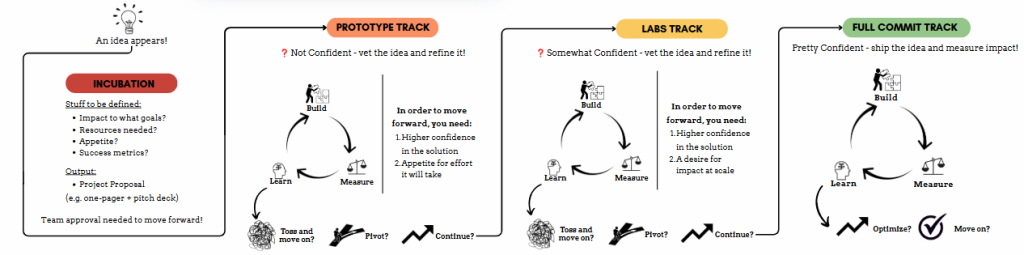

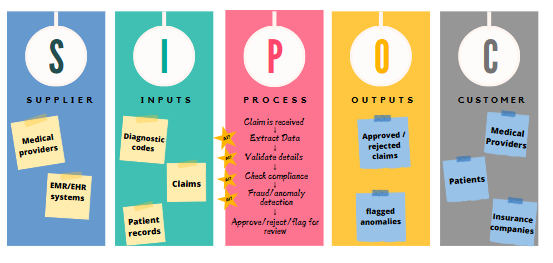

The AI ideation process starts from the incubation phase, during which the owner gathers data to answer crucial business questions before the project can even be considered. Product and process mapping related skills are rather important in this exercise. Example of such tools are Product Canvas for product features or SIPOC for process mapping.

-

- Nurture ideas through testing in controlled environments

After incubation, the project enters Prototype track, during which the team develops basic PoC running on a simple static dataset. We are still unsure whether it works (and in 50-70% of cases it won’t), so the level of resource allocation must be relatively small.

-

- Align successful projects with company goals

Upon successful prototyping the project enters Labs Track. Here we are more confident with the product, but there is still a level of uncertainty, especially considering the fact that going from PoC to MVP will require a significant level of resource allocation. Critical question to ask here is – how well aligned the idea with organizational objectives? Only the most impactful projects must be pursued.

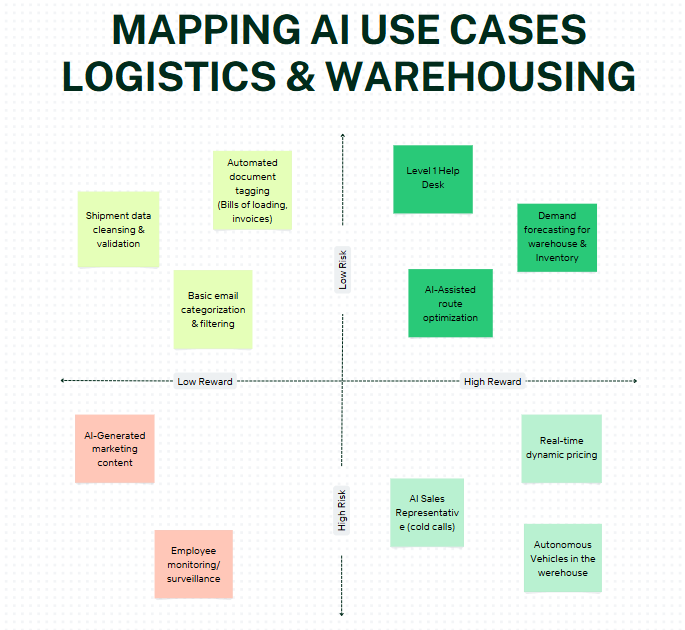

One proven method to prioritize which ideas are worth pushing forward is the risk/reward matrix – mapping your existing ideas on a canvas with Reward and Risk axes.

Here is an example of such mapping that we recently performed for logistics & Warehousing company:

Use cases in the top right corner have 1st priority, as their value is high and risk from introduction is deemed as low. On the opposite side, bottom left corner use cases must be avoided at all costs, as they introduce significant risks without bringing much value.

-

- Launch with agility, adapting as you go

When technical feasibility is confirmed and use cases are aligned with organizational objectives, commit fully and launch them. You should cautiously deploy and follow success metrics continuously.

-

- On the compliance front, start with mapping out where AI is already in use and seek early legal guidance.

This is done to make sure that there is a common understanding of what tools are actively used and create initial whitelists of tools that are approved for usage. This will be important for the next steps.

-

- Then assess data privacy and security, create an AI literacy program and established guardrails for responsible use.

Create AI policies and rules for what is allowed and what is not, and incorporate AI literacy programs into employee continuous development. These cover safe usage, privacy and collaboration techniques for normal users, but are different for power users that may encounter high-risk AI systems in their daily job (compliance, legal, HR, IT)

-

- Prepare by assigning clear responsibilities and building interdisciplinary teams.

AI Governance team must be collected, consisting of legal, compliance, cybersec and IT functions. This enables a wholesome approach towards AI policy.

At the very least topics like human oversight and decision making, approved AI tools, incident reporting, sensitive & personal data, copyright, consent & transparency and individual responsibility should be covered, but this list may be expanded.

-

- Finally, continuously monitor, testing for safety and reliability and establishing clear problem-solving procedures.

Let’s remember that AI tools are vulnerable and can be manipulated. So threats like prompt injection or model/Data poisoning are very real. We recommend employing frameworks like NIST AI RMS and continuously keep yourself updated on OWASP’s list of Top 10 vulnerabilities.

This journey is more than a checklist – it’s about thoughtfully weaving AI into your organization’s fabric, ensuring innovation and compliance go hand in hand.